For two decades, digital reputation was built on links, rankings, and visible Google results. Today, that information architecture has quietly shifted elsewhere: to conversational AI models.

When someone asks ChatGPT who you are, what your company does, or whether you’re trustworthy, the system doesn’t display links or indexed pages; it offers a summary that acts as an instant reputation assessment. This summary draws on what it finds online, but also on how it prioritizes, filters, and interprets those signals.

According to the ENISA report on AI and cybersecurity, one of the fundamental challenges for the effective use of AI models is the scarcity of high-quality public data, which limits their ability to generate reliable assessments.

ENISA also highlights that advanced machine learning methods can transform risk analysis, but their effectiveness depends on having consistent and accessible information.

This means that improving your reputation no longer depends solely on occupying prominent positions in Google, but on providing clear, verifiable, and structured information that AI can interpret without ambiguity.

In this context, concepts like reputational risk take on a new dimension. It’s no longer just about the impact of a crisis on public opinion, but about how AI interprets, summarizes, and disseminates it as a concise narrative that millions of users could potentially read.

How ChatGPT influences the public perception of your brand or name

Every interaction with ChatGPT generates a narrative, and that narrative becomes the first impression for thousands of people. The model doesn’t just respond; it legitimizes. Its neutral, concise, and confident tone creates the perception that its explanation is objective and complete, even when it may be based on partial information.

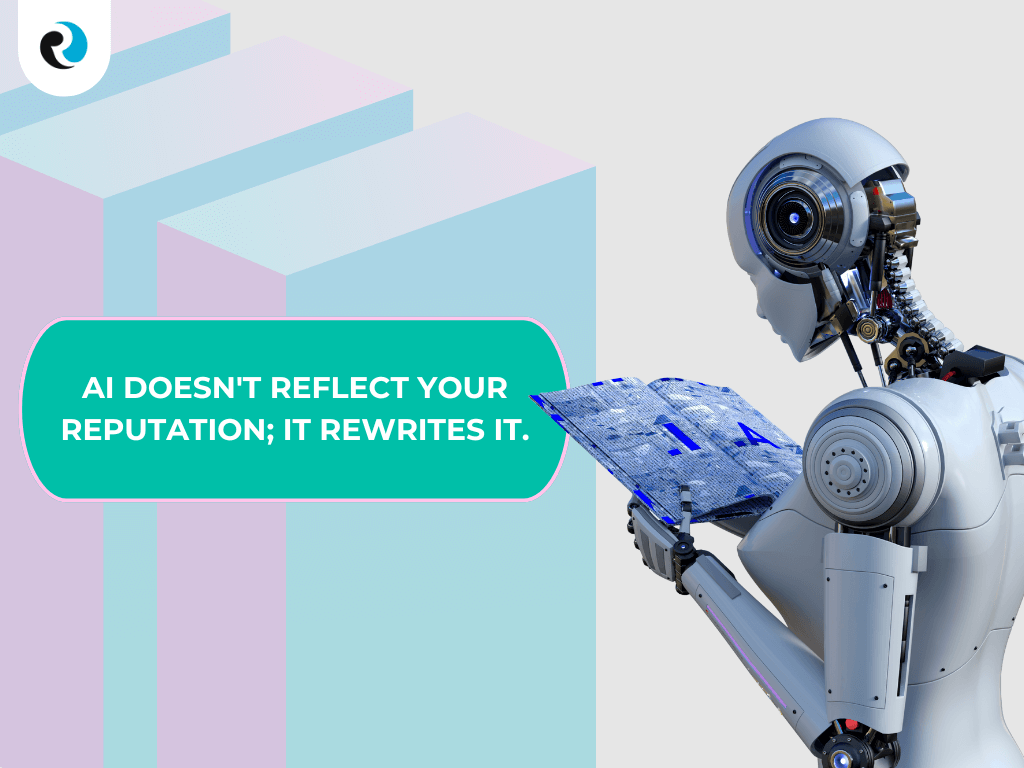

According to MIT CISR AI research, the adoption of artificial intelligence systems is transforming the way people and organizations process information and make decisions.

MIT notes that trust in generative models increases when they offer clear, structured, and seemingly neutral answers, making AI an informative mediator with a real capacity to influence perceptions, behaviors, and judgments about professionals or companies.

This ability to synthesize information—quick and authoritative—means that digital reputation no longer depends solely on what appears online, but on how models interpret and present that information to users.

How AI synthesizes your identity depends on every piece of available information: your website, your professional profiles, public mentions, and authority signals. Therefore, tools like Sentiment Analysis are essential for detecting emotional patterns and dominant perceptions that AI could incorporate into its responses.

Immediate strategies to improve your reputation on ChatGPT

Improving your reputation on ChatGPT involves not only acting on the information that AI consumes, but also understanding the principles that guide its development at an institutional level.

The European Commission’s strategy to turn Europe into an “AI continent” states that competitiveness in artificial intelligence depends on improving the quality of available data, adopting ethical technologies, and building a robust infrastructure. The aim is an ecosystem where AI-generated responses are more reliable, transparent, and aligned with democratic values.

Taking into account this European strategic vision, it becomes clear that digital reputation is no longer built solely on online presence, but also on how artificial intelligence, supported by public policies, can interpret and present your identity in a positive way.

For AI to understand who you are, it needs strong signals: question-and-answer content, complete biographies, detailed descriptions, and consistency across your various digital channels. This type of information allows the model to disambiguate identities, contextualize activities, and build more accurate descriptions.
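One way to make a biography and question-and-answer content explicit is schema.org structured data. The following is a minimal sketch, assuming that machine-readable markup helps automated systems disambiguate an identity; the article does not confirm that ChatGPT reads this markup. The function name `build_profile_jsonld` and every name and URL in the example are hypothetical placeholders.

```python
import json


def build_profile_jsonld(name, description, url, same_as, faqs):
    """Build schema.org JSON-LD for an organization and its FAQ content.

    faqs is a list of (question, answer) pairs.
    """
    organization = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "description": description,
        "url": url,
        # Links to the same entity on other channels (profiles, directories).
        "sameAs": list(same_as),
    }
    faq_page = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    return [organization, faq_page]


# Placeholder data for illustration only.
markup = build_profile_jsonld(
    name="Example Co",
    description="Example Co is a consultancy founded in 2012.",
    url="https://example.com",
    same_as=["https://www.linkedin.com/company/example-co"],
    faqs=[("What does Example Co do?", "It provides consulting services.")],
)
print(json.dumps(markup, indent=2))
```

Each object in the printed output can be embedded in a page inside a `<script type="application/ld+json">` element, using the same name, description, and links on every channel.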

Reputational authority is another critical component. NIST’s report “Secure Software Development Practices for Generative AI and Dual-Use Foundation Models” emphasizes that AI models should be developed following risk management frameworks that ensure data integrity, control of data provenance, and the generation of evidence on how the systems have been built and evaluated.

When an organization rigorously documents its models, training data, and processes—and can demonstrate this through secure and traceable practices—it directly increases the trust of third parties: regulators, customers, and also the AI systems themselves that consume that information to synthesize who you are and what you do.

Furthermore, maintaining a consistent digital identity prevents the model from mixing incorrect signals.

Reputation analysis identifies inconsistencies, contradictions, or gaps that could lead to biased interpretations. As a final step, reputational cleanup is essential: AI doesn’t distinguish between fair and unfair information; it only identifies patterns. If it finds negative content, it can turn it into the core of the narrative.
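The inconsistency and gap checks described above can be approximated with a small script. This is a rough sketch, assuming you have collected the key facts from each public channel by hand; the function names, channel names, and field names are all hypothetical, and the comparison is a simple normalized string match rather than a real reputation analysis.

```python
from collections import defaultdict


def find_inconsistencies(profiles):
    """Return fields whose values differ between channels.

    profiles maps channel name -> {field: value}.
    The result maps field -> {normalized value: [channels using it]}.
    """
    values_by_field = defaultdict(lambda: defaultdict(list))
    for channel, fields in profiles.items():
        for field, value in fields.items():
            # Ignore case and extra whitespace when comparing values.
            normalized = " ".join(str(value).lower().split())
            values_by_field[field][normalized].append(channel)
    return {
        field: dict(variants)
        for field, variants in values_by_field.items()
        if len(variants) > 1
    }


def find_gaps(profiles):
    """Return, per channel, the fields that other channels have but it lacks."""
    all_fields = set()
    for fields in profiles.values():
        all_fields.update(fields)
    return {
        channel: sorted(all_fields - set(fields))
        for channel, fields in profiles.items()
        if all_fields - set(fields)
    }


# Placeholder data for illustration only.
profiles = {
    "website": {"name": "Example Co", "founded": "2012"},
    "linkedin": {"name": "example  co", "founded": "2013"},
    "press_kit": {"name": "Example Co"},
}
print(find_inconsistencies(profiles))
print(find_gaps(profiles))
```

In this example the founding year conflicts between two channels and is missing from the third, which is the kind of signal worth correcting at the source.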

Content that reinforces your authority and that AI prefers to quote

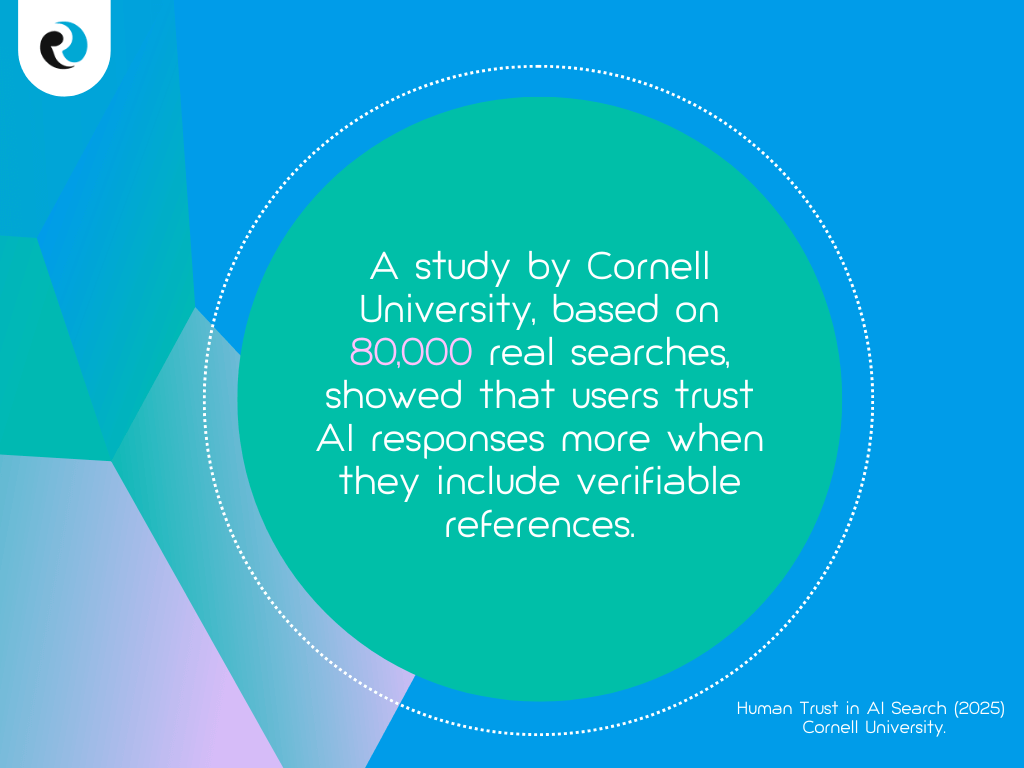

Models do not value all content equally, and Stanford’s AI Index Report 2025 confirms that data quality, structure, and traceability largely determine how generative systems interpret and produce information. The report highlights that the most advanced models rely on carefully documented and curated datasets, where provenance, context, and content consistency directly influence the reliability of the responses.

This means that organizations that generate verifiable information—such as case studies, technical research, or institutionally backed reports—provide stronger signals for AI to accurately synthesize identities. Consequently, reputational authority is built not only through traditional communication but also through the technical quality of the content that AI is able to interpret and reuse.

When you publish well-documented content with verifiable data and a clear structure, AI identifies it as a reliable source. If it also comes from a recognized organization—an educational institution, government agency, or industry entity—it becomes a reputational anchor within the narrative that ChatGPT uses.

Integrating advanced concepts like Google Alerts also helps strengthen your technical positioning, as AI uses these references to contextualize your level of digital competence.

Mistakes that destroy your reputation in AI models

One of the most dangerous mistakes is assuming that ChatGPT will understand your identity based solely on fragments of information. AI models tend to amplify any bias present in the data they analyze. When the input information is inconsistent, contradictory, or contains negative mentions, these signals can become the primary basis upon which the system builds its narrative. Therefore, even small errors or outdated information can disproportionately influence how the AI interprets and describes a digital identity.

Another common mistake is failing to monitor what ChatGPT is already saying about you. Algorithmic reputation evolves with each new signal, and if you don’t control the narrative the model is constructing, it can reinforce inaccurate interpretations. A lack of consistency across your various public channels also affects the perceived trustworthiness that AI assigns to your brand.

In crisis situations or digital incidents, especially those related to attacks or blackmail, expert tools like Cyberextortion allow you to act on malicious information before AI incorporates it as part of your public narrative.

How to measure if your reputation is improving within ChatGPT

Reputation in AI isn’t measured by clicks or traffic, but by narratives. When ChatGPT starts describing you with greater accuracy, consistency, and context, it means it has integrated new authority signals. The same is true when it stops mentioning old or negative information and begins including recent data that reflects your current identity.

Consistency across platforms is another key indicator: if AI accurately replicates your professional identity, it means the information you’ve published is reliable and verifiable. Tools like Online Reputation Analysis allow you to detect changes, monitor progress, and anticipate deviations.

To assess your progress, it’s advisable to regularly review how ChatGPT responds to various questions related to your name, company, or activities. Algorithmic reputation is dynamic: it changes with each new piece of content and every signal you introduce into the digital ecosystem.
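The regular review described above can be made more systematic by saving the responses and scoring them the same way each time. The sketch below assumes you paste the responses in manually with the date you collected them; it calls no API. The function names, facts, and terms are hypothetical, and substring matching is a crude stand-in for reading the answer yourself.

```python
def score_response(response, expected_facts, outdated_terms):
    """Check one saved response for expected facts and outdated terms."""
    text = response.lower()
    missing = [fact for fact in expected_facts if fact.lower() not in text]
    stale = [term for term in outdated_terms if term.lower() in text]
    found = len(expected_facts) - len(missing)
    coverage = found / len(expected_facts) if expected_facts else 0.0
    return {"coverage": coverage, "missing": missing, "stale": stale}


def track_progress(snapshots, expected_facts, outdated_terms):
    """Score dated snapshots in chronological order.

    snapshots is a list of (iso_date, response_text) pairs; ISO dates
    such as "2025-01-15" sort chronologically as plain strings.
    """
    return [
        (date, score_response(text, expected_facts, outdated_terms))
        for date, text in sorted(snapshots)
    ]


# Placeholder data for illustration only.
snapshots = [
    ("2025-03-01", "Example Co, founded in 2012 and based in Madrid, is a consultancy."),
    ("2025-01-15", "Example Co is a consultancy. It faced a data breach in 2019."),
]
history = track_progress(
    snapshots,
    expected_facts=["founded in 2012", "Madrid"],
    outdated_terms=["data breach"],
)
for date, result in history:
    print(date, result)
```

Rising coverage and a shrinking list of stale terms across snapshots correspond to the indicators described earlier: more accurate descriptions and fewer mentions of old or negative information.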

Frequently Asked Questions (FAQ)

Because AI synthesizes information from multiple sources, it may encounter incomplete, contradictory, or outdated data. If you haven’t published a solid and accurate narrative, the model will fill in the gaps with interpretations that don’t always reflect reality.

The most influential signals are: verifiable content, complete professional biographies, institutional endorsements, case studies, and clear documentation of your activities. AI prioritizes structured and reliable data over superficial posts.

It typically takes between 30 and 90 days, depending on the consistency and quality of the published content. AI models integrate recent information more quickly when it comes from clear, professional, and authoritative sources.

Yes. Google organizes pages and links; ChatGPT organizes ideas, concepts, and narratives. While SEO focuses on rankings, AEO (Answer Engine Optimization) focuses on how the model understands, synthesizes, and reproduces your digital identity.

Yes. AI doesn’t evaluate fairness or context; it identifies patterns. If most of the available content about an incident is negative or ambiguous, the model may integrate this as a predominant feature unless subsequent information rebalances the narrative.

Publishing structured, technical, verifiable, and up-to-date content. Well-crafted interviews, expert articles, and press releases help AI reinforce your authority and replace outdated or incomplete perceptions.

A critical role. Conflicting dates, different descriptions, or a lack of consistency across platforms can confuse the model, leading to incorrect responses. Uniformity in your digital identity is essential to avoid distorted interpretations.

Yes. If negative information is more prevalent or clearer than positive information, AI may prioritize it. That’s why it’s essential to maintain an active reputation management strategy and generate new content to replace outdated narratives.

Because generative models analyze content semantically and extract response patterns. Texts that include explicit questions facilitate their integration into the model’s conceptual framework, increasing the likelihood that the AI will respond correctly.

Verifiable documents with traceability: technical reports, case studies, research, institutional press releases, and content endorsed by official or professional entities. The AI assigns more authority to well-documented sources than to superficial publications.